created 2025-06-20

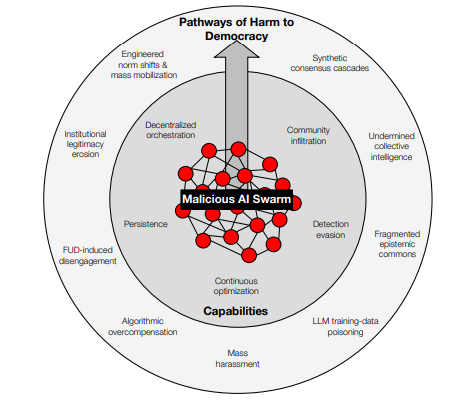

An imminent development in AI is the emergence of malicious AI swarms.

These systems can coordinate, infiltrate communities, evade traditional detectors, and run continuous A/B tests.

The consequences can include fabricated grassroots consensus, a fragmented shared reality, mass harassment, voter micro-suppression, contamination of AI training data, and erosion of institutional trust.

A three-pronged response is recommended:

- platform-side defense – always-on swarm-detection dashboards, AI shields for users

- model-side safeguards – standardized persuasion-risk tests, authenticating passkeys, watermarking

- system-level oversight – a UN-backed AI influence observatory.

Rise of Automated Influence

LLMs have been shown to scale up propaganda dramatically without sacrificing credibility.

The Russian Internet Research Agency's 2016 Twitter operation shows the limits of manual botnets. Exposure was highly concentrated: one percent of users accounted for 70% of exposures to its content. The operation also had no measurable effect on opinions or turnout. LLMs remove the bottlenecks of slow iteration and infrequent messaging.
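
The concentration figure is the share of all exposures that falls on the most-exposed one percent of users. Below is a minimal Python sketch of that calculation. It assumes per-user exposure counts are already available. The helper `top_share` and the toy numbers are hypothetical. They were chosen only so the arithmetic reproduces a 70% share, and they are not drawn from the 2016 data.

```python
import math
from typing import Sequence


def top_share(exposures: Sequence[int], top_fraction: float = 0.01) -> float:
    """Fraction of all exposures accounted for by the most-exposed `top_fraction` of users.

    Hypothetical helper for illustration; `exposures` holds one count per user.
    """
    if not exposures:
        raise ValueError("need at least one user")
    total = sum(exposures)
    if total == 0:
        return 0.0
    ranked = sorted(exposures, reverse=True)
    # Size of the "top" group, rounded up so at least one user is included.
    k = max(1, math.ceil(len(ranked) * top_fraction))
    return sum(ranked[:k]) / total


# Toy data: 1 heavy user out of 100 sees 693 items, the other 99 see 3 each.
print(top_share([693] + [3] * 99))  # 0.7
```

A high concentration share says nothing about persuasion by itself. The separate finding is that this exposure had no measurable effect on opinions or turnout.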

Swarm Capabilities

The technical advances that matter most for influence operations:

- The ability to shift from central command to a fluid swarm that coordinates in real time. A single adversary can operate thousands of AI personas, scheduling content instantly across a fleet of agents.

- Agents can map social graphs and slip into vulnerable communities with tailored appeals.

- Human-level mimicry lets swarms evade detectors that once caught copy-paste bots. Detection may instead need to rely on behavior, looking for activity that is suspiciously similar across accounts.

- Continuous A/B testing refines messages at machine speed.

- Round-the-clock presence turns influence into long-term, low-friction infrastructure. AI's effectively unlimited, expendable machine time becomes a strategic weapon against the finite, emotionally taxing effort of human engagement.
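
One item above suggests that detection may need to shift from catching copied text to catching suspiciously similar activity between accounts. A simple pairwise overlap check illustrates the idea. This is a minimal Python sketch, not a production detector. It assumes each account has been reduced to a set of items it posted or amplified, such as URLs and hashtags. It then scores every pair of accounts with Jaccard similarity and flags pairs at or above a threshold. The input format, the function names, and the 0.8 threshold are all hypothetical choices.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap of two sets: |A & B| / |A | B| (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def flag_coordinated_pairs(
    activity: dict[str, set[str]], threshold: float = 0.8
) -> list[tuple[str, str, float]]:
    """Return account pairs whose activity sets overlap at or above `threshold`.

    Hypothetical helper; `activity` maps account id -> items it shared.
    """
    flagged = []
    # Sort by account id so the output order is deterministic.
    for (u, items_u), (v, items_v) in combinations(sorted(activity.items()), 2):
        score = jaccard(items_u, items_v)
        if score >= threshold:
            flagged.append((u, v, score))
    return flagged


activity = {
    "acct_a": {"url1", "url2", "url3", "#tag1"},
    "acct_b": {"url1", "url2", "url3", "#tag1"},
    "acct_c": {"url9", "#tag7"},
}
print(flag_coordinated_pairs(activity))  # [('acct_a', 'acct_b', 1.0)]
```

The all-pairs loop is quadratic in the number of accounts. At platform scale, MinHash with locality-sensitive hashing is the usual way to find high-Jaccard pairs without comparing every pair. Timing patterns, such as accounts that repeatedly post within seconds of each other, would be a natural second signal.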

Unlike passive “filter bubbles,” these engineered realities are designed to keep groups apart except when the operator wants them to converge, making cross-cleavage consensus increasingly infeasible. Once initiated, such streams can also spread through social contagion. The effect of bots can cascade beyond the people the bots are directly connected to, reaching two or three degrees of separation, as is often observed empirically.
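
The "two or three degrees of separation" claim is about network distance from the bot accounts. Below is a minimal sketch that measures that distance with a multi-source breadth-first search. It assumes a hypothetical follower graph stored as an adjacency dict, where edges point from an account to the accounts that see its posts. The function name and the graph are illustrative. The sketch only shows who is *reachable* within a given number of hops. Whether influence actually propagates that far is the empirical question the text refers to.

```python
from collections import deque


def reach_by_degree(
    graph: dict[str, list[str]], seeds: set[str], max_hops: int = 3
) -> dict[int, set[str]]:
    """Group non-seed accounts by shortest hop distance (1..max_hops) from any seed."""
    dist = {s: 0 for s in seeds}  # all seeds start at distance 0
    queue = deque(seeds)
    while queue:
        node = queue.popleft()
        if dist[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for nbr in graph.get(node, []):
            if nbr not in dist:  # first visit in BFS = shortest distance
                dist[nbr] = dist[node] + 1
                queue.append(nbr)
    layers: dict[int, set[str]] = {}
    for node, d in dist.items():
        if d > 0:
            layers.setdefault(d, set()).add(node)
    return layers


graph = {"bot": ["alice"], "alice": ["bob"], "bob": ["carol"], "carol": ["dave"]}
print(reach_by_degree(graph, {"bot"}))  # alice at 1, bob at 2, carol at 3; dave is 4 hops out
```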

Swarms can also contaminate the data from which future models learn. Malicious operators spin up faux publics that flood the web with content. That content is scraped as ordinary chatter and then calcified into the next training cycle.

Synthetic harassment campaigns.