created, $=dv.current().file.ctime & modified, =this.modified

tags: engineering, failure, y2025, complexity

Drift into Failure: From Hunting Broken Components to Understanding Complex Systems

Why am I reading this?

Curious about complex systems and failures. The key word here is “drift.” On all the computer systems I work on, the future state is known: failure. This is on my mind when I work on a server and when I save this document.

This idea of complex systems, and of the human mind obsessing over parts instead of relationships in the event of failure, is something that has been on my mind.

I remember as a kid during gym class we were playing a unit on indoor lacrosse. It was a tied game and I had the opportunity to score a goal, and “win it for the team.” I tried quite hard but ultimately my final shot failed to score. This was viewed by me and the team as a disappointment and a turning point. While it is true that had I scored, our team could have won, there is a network of other goals and player actions that could have caused a win, or made my failure inconsequential.

Mine unfortunately was the most salient and recent, and thus harped on as the most costly error.

Viewing it this way makes the entire process seem a bit arbitrary. The end score is just a reduction of features, all of them more interesting than a number.

Message

The growth of complexity in our society has got ahead of our understanding of how complex systems work and fail. Our technologies have gone ahead of our theories. We might understand something in isolation, but when things are connected their complexities multiply and we are often caught short.

Failure is Always an Option

Accidents are the effect of a systematic migration of organizational behavior under the influence of pressure toward cost-effectiveness in an aggressive, competitive environment.

The “accidental” seems to become less obvious, and the roles of human agency, decision-making and organizational trade-offs appear to grow in importance.

The central, common problem is one of culprits, driven by production, expediency and profit, and their unethical decisions.

Cartesian-Newtonian epistemology = rationalism, reductionism, belief that there is the best (optimal) decision, solution, theory, explanation of events.

Rational decision maker optimum (Subjective Expected Utility Theory)

- completely informed: knows of all possible alternatives and which courses of action will lead to which alternatives.

- capable of objective, logical analysis of all available evidence on what would constitute the smartest alternative and can see the finest differences between choice alternatives.

- DM is fully rational and able to rank utility relative to goals DM finds important.
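
A minimal sketch of the rule these three bullets describe, assuming a toy decision with made-up numbers. The action names, probabilities and utilities are hypothetical and not from the book; they only show the mechanics of weighting each outcome's utility by its believed probability and picking the highest total.

```python
def expected_utility(outcomes):
    """Sum of probability * utility over one action's possible outcomes."""
    return sum(probability * utility for probability, utility in outcomes)


def best_action(actions):
    """Return the action name with the highest expected utility."""
    return max(actions, key=lambda name: expected_utility(actions[name]))


# Hypothetical beliefs of the decision maker: (subjective probability, utility).
# These numbers are invented for illustration only.
actions = {
    "replace_early": [(1.0, -10.0)],                  # certain, small cost
    "run_to_failure": [(0.95, 0.0), (0.05, -500.0)],  # usually free, rarely very costly
}

for name, outcomes in actions.items():
    print(name, expected_utility(outcomes))

print("chosen:", best_action(actions))
```

The calculation only works if every alternative, probability and utility is known up front, which is exactly the assumption these notes go on to question for complex systems.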

Tech has developed quicker than theory

Analyses of breakdowns in complex systems remain depressingly linear, depressingly componential.

One of the beautiful things about complexity is that we couldn’t design it, even if we wanted to.

In a crisis, all correlations go to 1.

Complexity, Locality and Rationality

Direct, unmediated (or objective) knowledge of how a whole complex system works is impossible. Knowledge is merely an imperfect tool used by an intelligent agent to help it achieve its personal goals.

In complex systems (which our world increasingly consists of) humans could not or should not even behave like perfectly rational decision-makers.

Optimizing decisions and conditions locally can lead to global brittleness.

Complex systems can exhibit tendencies to drift into failure because of uncertainty and competition in their environment. Adaptation is driven by the need to balance resource scarcity and cost pressures with safety.

Drift occurs in small steps: small deviations from normalcy, with each decision setting a precedent.

BP gradually accepted “run to failure” where parts would not be replaced preemptively.

In a complex system, however, doing the same thing twice will not predictably or necessarily lead to the same results.

Drift itself is the result of constant adaptation and the growing or continued success of such adaptation in a changing world. Drift is an emergent property of a system’s adaptive capacity.

Post-structuralism stresses the relationship between the reader and the text as the engine of “truth.” Reading in post-structuralism is not seen as the passive consumption of what is already there, provided by somebody who possessed the truth and is only passing it on. Rather, reading is a creative act, a constitutive act, in which readers generate meanings out of their own experience and history with the text and with what it points to.

Features of Drift

NOTE

The airplane story at this segment is terrifying.

The jackscrew-nut assembly that holds the horizontal stabilizer had failed, rendering the aircraft uncontrollable.

But such accidents do not happen just because something suddenly breaks. There is supposed to be too much built-in protection against the effects of single failures. Other things have to fail too.

Crews without guidance would experiment and improvise, possibly making things worse.

Finally, the investigation noted shortcomings in regulatory oversight by the Federal Aviation Administration, and an aircraft design that did not account for the loss of the acme nut threads as a catastrophic single-point failure mode.

The idea that failure can be explained by looking for other failures leaves important questions unanswered.

A first question is quite simple. Why, in hindsight, do all these other parts (in the regulator, the manufacturer, the airline, the maintenance facility, the technician, the pilots) appear suddenly “broken” now? How is it that a maintenance program which, in concert with other programs like it, never revealed any fatigue failures or fatigue damage after 95 million accumulated flight hours, suddenly became “deficient?” The broken parts are easy to discover once the rubble is strewn before our feet. But what exactly does that tell us about processes of erosion, of attrition of safety norms, of drift toward margins? What does the finding of broken parts tell us about what happened before all those broken parts were laid out before us?

Why weren’t these deficiencies addressed or treated as deficiencies?

Even before a bad outcome has occurred, indications of trouble are often chalked up to unreliable components that need revision, double-checking, more procedural padding, engineered redundancy. And this might all help. But it also blinds us to alternative readings. It deflects us from the longitudinal story behind those indications, it downplays our own role in rationalizing and normalizing signs of trouble. This focus on components gives us the soothing assurance that we can fix the broken parts, shore up the unreliable ones, or sit down together in a legitimate-sounding or expert-seeming committee that decides that the unreliability of one particular part is not all that critical because others are packed around to provide redundancy.

The work in a complex system is bounded by three types of constraints

- economic boundary, where the system cannot sustain itself financially.

- workload boundary, beyond which people or tech cannot perform the task they are supposed to.

- safety boundary, beyond which the system will functionally fail.

Starting from a lubrication interval of 300 hours, the interval at the time of the Alaska 261 accident had moved up to 2,550 hours, almost an order of magnitude more. As is typical in the drift toward failure, this distance was not bridged in one leap.
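
A small worked check of the “almost an order of magnitude” figure, plus a sketch of why no single step looks alarming. Only the 300 and 2,550 hour values come from the notes above. The number of steps and the equal-ratio spacing are assumptions made purely for illustration; the real intermediate intervals are not recorded here.

```python
BASELINE_HOURS = 300      # starting lubrication interval (from the notes)
FINAL_HOURS = 2550        # interval at the time of the accident (from the notes)
HYPOTHETICAL_STEPS = 8    # assumption for illustration, NOT from the source


def drift_path(start, end, steps):
    """Intervals for `steps` equal-ratio extensions going from start to end."""
    ratio_per_step = (end / start) ** (1 / steps)
    return [start * ratio_per_step ** i for i in range(steps + 1)]


total_ratio = FINAL_HOURS / BASELINE_HOURS
print(f"final interval is {total_ratio:.1f}x the baseline")

path = drift_path(BASELINE_HOURS, FINAL_HOURS, HYPOTHETICAL_STEPS)
for previous, current in zip(path, path[1:]):
    vs_previous = current / previous - 1      # how the step looks locally
    vs_baseline = current / BASELINE_HOURS - 1  # how it looks against the origin
    print(f"{current:7.0f} h  +{vs_previous:.0%} vs last step  +{vs_baseline:.0%} vs baseline")
```

Judged against the previous interval, every step in this made-up path is the same modest extension; only the comparison with the original baseline shows how far the norm has moved.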

Theorizing Drift

It takes more than one error to push a complex system into failure. Theories explaining failure by mistake or broken component (Cartesian-Newtonian) are overapplied and overdeveloped.

Exegesis is an assumption that the essence of the story is already in the story, ready formed, waiting to be discovered. All we need to do is to read the story well, apply the right method, use the correct analytic tools, put things in context, and the essence will be revealed to us. Such structuralism sees the truth as being within or behind the text.

Eisegesis is reading something into a story. Any directions in adaptations are of our own making. Post-structuralism stresses the relationship between the reader and the text as the engine of truth. Reading is not passive consumption of what is already there, provided by somebody who possessed the truth, passing it on. Reading is a creative act. Readers generate meanings out of their own experience and history with text.

Systems thinking is about

- relationships, not parts

- the complexity of the whole, not the simplicity of the carved out bits

- nonlinearity and dynamics, not linear cause-effect

- accidents that are more than a sum of broken parts

- understanding how accidents can happen when no parts are broken, or no parts are seen as broken.

High Reliability Theory

Components of a high-reliability organization

- Leadership commitment

- Redundancy and layers of defense

- Entrusting people at the sharp edge to do what they think is needed for safety

- Incremental organizational learning through trial and error, analysis, reflection and simulation. This learning should not be organized centrally; like decision making, it should be distributed locally.

Systems Thinking

Mechanistic thinking about failures means going down and into individual “broken” components. Systems thinking means going up and out: understanding comes from seeing how the system is configured in a larger network of systems, tracing the relationships with those, and how those spread out to affect and be affected by factors lying far away in time and space from where things went wrong.

In complex systems, just like in physics, there are no definite predictions, only probabilities.

Accidents are emergent properties of complex systems.

Diversity

Diversity is critical for resilience. One way to ensure diversity is to push authority about safety decisions down to people closest to the problem.

Tactics to manage Drift

- Small steps provide opportunity for reflection on what this step can mean for safety.

- Small steps don’t generate defensive posturing when they are questioned, and they are cheap to roll back.

- Outside hiring provides opportunity to calibrate practices and correct deviance. Rotation of teams works.

- Unruly technology has been seen as a pest that needs tweaking till it becomes optimal. Complexity theory suggests unruly tech is not an unfinished product but a tool for discovery.

With Regards to The Rehearsal S2

In the conclusion of S2E02 -

Yeah — there’s “performance” on both sides of the table. Some would be bolstered by the hard truth, some artists would better skip the audition and hone their craft, some would love encouragement.

I think at the core of all of this is that humans are complex networks of interaction that are also dependent on one another. Complex systems cannot be reduced to individual parts so easily, such as when indicating the cause of an aviation accident (this is covered in the book, “Drift into Failure” by Sidney Dekker, which coincidentally covers aviation disaster as a case study). Saying something like “the crash was caused by a loose screw” fails to capture the whole of systems that caused the “drift” into a particular state. It focuses on parts instead of relationships.

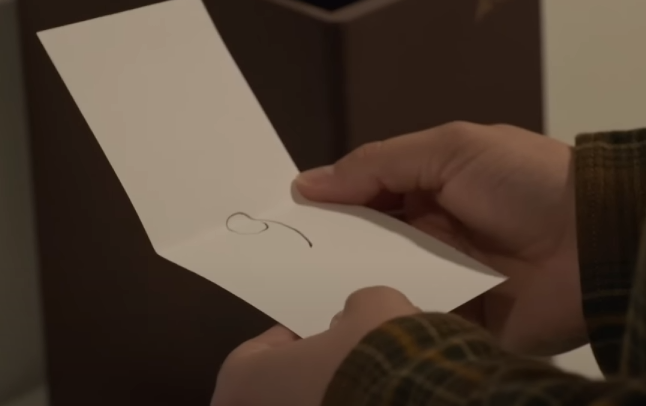

The 9 to 6 transformation captures the folly of distilling something into a scale. At the end of the day, it’s just a number that he’s attributing something to.

Leading up to the reveal, we all had a number in mind we thought was in the invisible box.

Is your 6, my 9?

He’s really left with nothing conclusive from this experiment, simply observing a number. But things were felt.