created 2025-03-23, & modified, =this.modified

tags: y2025, design, architecture, virtual-spaces

Why I’m reading

Returning to studying virtual spaces and virtual architectures. Digital worlds etc.

Introduction

Architects tend to be late in embracing technological change.

Vitruvius’s De Architectura

De architectura (On architecture, published as Ten Books on Architecture) is a treatise on architecture written by the Roman architect and military engineer Marcus Vitruvius Pollio and dedicated to his patron, the emperor Caesar Augustus, as a guide for building projects. As the only treatise on architecture to survive from antiquity, it has been regarded since the Renaissance as the first known book on architectural theory, as well as a major source on the canon of classical architecture.

Vitruvius sought to address the ethos of architecture, declaring that quality depends on the social relevance of the artist’s work, not on the form or workmanship of the work itself. Perhaps the most famous declaration from De architectura is one still quoted by architects: “Well building hath three conditions: firmness, commodity, and delight”. (modern sense: sturdy, useful and beautiful.)

the high rises of Gothic spires and pinnacles were so daring and original that we still do not know how they were built (and we would struggle to rebuild them if we had to use the tools and materials of their time)

Accurate?

For houses, unlike automobiles or washing machines, can hardly be identically mass-produced: to this day, with few exceptions, that is still technically impossible.

Late 20th century postmodernists - every human dwelling should be a one-off, a unique work of art, made to measure and made to order like a bespoke suit.

Thought

Even if the shape itself is homogenized at the start, with architectural drift and furnishing interiors, there is some variation.

Text says digital mass customization was the idea of architects – “designers and architects—not technologists or engineers, not sociologists or philosophers, not economists or bankers, and certainly not politicians, who still have no clue about what is going on.”

rel:Computer Architecture, Design, Love - we once used computers to mimic the old way, but now we use them for non-human process:

At the beginning, in the 1990s, we used our brand-new digital machines to implement the old science we knew—in a sense, we carried all the science we had over to the new computational platforms we were then just discovering. Now, to the contrary, we are learning that computers can work better and faster when we let them follow a different, nonhuman, post-scientific method; and we increasingly find it easier to let computers solve problems in their own way—even when we do not understand what they do or how they do it.

The Second Digital Turn

Microscopes and telescopes, in particular, opened the door to a world of very big and very small numbers,

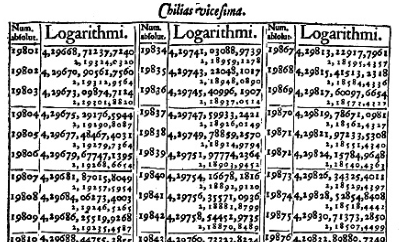

Logarithms were one of the most effective data-compression technologies of all time.

Laplace, Napoleon’s favorite mathematician, famously said that logarithms, by “reducing to a few hours the labor of many months, doubled the life of the astronomer.”

Logarithms can serve some practical purpose only when paired with logarithmic tables, where the conversion of integers into logarithms, and the reverse, are laboriously precalculated. Logarithmic tables, in turn, would be useless unless printed.
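To make the table idea concrete for myself, here is a minimal sketch of multiplying two three-digit numbers with nothing but lookups and one addition. The 4-place table, the 100..999 range, and all function names are my own assumptions, not anything from the text; a real printed table would also include interpolation aids.

```python
import math
from bisect import bisect_left

# Hypothetical 4-place table for 100..999: computed ("printed") once, reused forever.
TABLE = [(n, round(math.log10(n), 4)) for n in range(100, 1000)]
LOGS = [lg for _, lg in TABLE]


def table_log(n: int) -> float:
    """Look up the tabulated logarithm of a three-digit integer."""
    return TABLE[n - 100][1]


def table_antilog(target: float) -> int:
    """Return the three-digit integer whose tabulated log is nearest to target."""
    i = bisect_left(LOGS, target)
    candidates = TABLE[max(i - 1, 0): i + 1]
    return min(candidates, key=lambda entry: abs(entry[1] - target))[0]


def multiply_with_table(a: int, b: int) -> int:
    """Approximate a * b (both in 100..999) by adding logs and looking up the result."""
    total = round(table_log(a) + table_log(b), 4)
    characteristic = int(total)        # whole part: sets the order of magnitude
    mantissa = total - characteristic  # fractional part: sets the leading digits
    digits = table_antilog(2 + mantissa)
    return digits * 10 ** (characteristic - 2)
```

The answer only keeps about three significant digits, so `multiply_with_table(123, 456)` lands close to the exact 56088 rather than on it. That loss is the price of the compression.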

Many repetitive calculations were centralized, then distributed.

Logarithms are the quintessential mathematics of the age of printing; a data-compression technology of the mechanical age.

The alphabet is an old and effective compression technology for sound recording. Alphabetic notation converts the variation of sounds produced by human voice (and sometimes a few other sound noises as well) into a limited number of signs, which can be easily recorded and transmitted across space and time.

Thought

Variation points to identity, hence why an artist would want originality in something they make. So the call for subversion and the anti-.

In tech, when browsing the web you end up with a browser fingerprint, simply based on the variation in signatures that you present when accessing a site (client-specific features, such as installed browser extensions). In the past, even things like battery level could be a trackable signal.

Does my original point make sense? Does variation point to identity, or is it more of a second-order effect: through identity you have these consequences, such as a unique environment?
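A rough sketch of how variation turns into an identifier, assuming a site simply hashes whatever attributes the client exposes. The attribute names and the `fingerprint` helper are hypothetical; real fingerprinting scripts collect many more signals and combine them in other ways.

```python
import hashlib
import json
from typing import Dict


def fingerprint(attributes: Dict[str, object]) -> str:
    """Collapse a set of client attributes into a short, stable identifier."""
    # Sorting keys makes the result independent of the order attributes were read in.
    canonical = json.dumps(attributes, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Hypothetical clients: identical except for one small variation.
client_a = {"user_agent": "ExampleBrowser/1.0", "extensions": ["adblock"], "battery": 0.82}
client_b = {"user_agent": "ExampleBrowser/1.0", "extensions": ["adblock"], "battery": 0.81}
```

Here `fingerprint(client_a)` and `fingerprint(client_b)` come out different even though only the battery reading changed, which is the sense in which variation points to identity.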

This strategy worked well for centuries, and it still allows us to read transcripts from the living voices of famous people we never listened to and who never wrote a line, such as Homer, Socrates, or Jesus Christ.

In time, the alphabet was adapted to silent writing and silent reading, and its original function as a voice recorder was lost, but its advantages as a data-compression technology were not. The Latin alphabet, for example, records all sounds of the human voice, hence most conveyable thoughts of the human mind, using a combination of less than thirty signs.

Thought

This doesn’t seem accurate but I understand the sentiment.

Don’t Sort: Search

Gmail was released on April 1st 2004, with the tagline “Search don’t sort” which promised to use search to find the exact message.

Aristotle’s classifications divided all names into ten categories, known in the middle ages as predicamenta

Predicamenta

- substance - essence, that which cannot be predicated of anything or said to be in anything. That particular tree is a substance.

- quantity - The extension of an object.

- qualification, or quality - characterizes an object (black, white, grammatical, sweet)

- relative - the way an object relates to another (half, master)

- where - position in relation to surroundings

- when - position in relation to events

- relative position - condition of rest from an action (sitting)

- having a state - a condition of rest from being acted on (armed)

- doing an action - The production of change in some object

- being affected

With classifications construed as cascades of divisions and subdivisions, encyclopedias (which originally, and etymologically, referred to a circle, or cycle, of knowledge) started to look less like circles and more like trees, with nested subdivisions represented as branches and sub-branches.

etymology:

1530s, “general course of instruction,” from Modern Latin encyclopaedia (c. 1500), thought to be a false reading by Latin authors of Greek enkyklios paideia taken as “general education,” but literally “training in a circle,” i.e. the “circle” of arts and sciences, the essentials of a liberal education; from enkyklios “circular,”

Segment alluding to Bibliotheca universalis where Gessner devised a universal method of classification by treelike subdivisions pegged to a numeric ordering system—an index key which could also have served as a call number, although it does not seem it ever was. That could hardly have happened in the south of Europe anyway, as all of Gessner’s books, particularly his bibliographies, were immediately put in the Index of Prohibited Books by the Roman Inquisition.

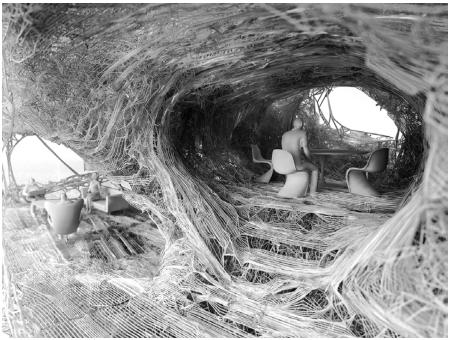

Gilles Retsin and Softkill Design, Protohouse. Structural optimization (by iterative removal and addition of material) aimed at obtaining a minimal volume and uniform stress throughout a complex architectural envelope.

Artisans of pre-industrial times were not engineers; hence they did not use math to predict the behavior of the structures they made. When they had talent they learned it intuitively, by trial and error, making and breaking as many samples as possible.

By making and breaking (in simulation) a huge number of variations, at some point we shall find one that does not break, and that will be the good one.
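A toy version of "making and breaking in simulation", to see what the loop looks like. This is only a sketch under my own assumptions: the load, length, and allowable stress are made-up numbers, the "structure" is a plain rectangular cantilever beam, and the search is blind random sampling rather than the iterative removal and addition of material used in Protohouse.

```python
import random
from typing import Optional, Tuple

LOAD_N = 1000.0        # hypothetical end load on the beam, in newtons
LENGTH_M = 2.0         # hypothetical beam length, in metres
MAX_STRESS_PA = 100e6  # hypothetical allowable stress of the material

Design = Tuple[float, float]  # (width, height) of the cross-section, in metres


def peak_stress(design: Design) -> float:
    """Peak bending stress of an end-loaded rectangular cantilever: 6FL / (b h^2)."""
    width, height = design
    return 6 * LOAD_N * LENGTH_M / (width * height ** 2)


def make_and_break(trials: int, seed: int = 0) -> Optional[Design]:
    """Try random designs; keep the one with the least material that does not break."""
    rng = random.Random(seed)
    best: Optional[Design] = None
    for _ in range(trials):
        design = (rng.uniform(0.01, 0.2), rng.uniform(0.01, 0.2))
        if peak_stress(design) > MAX_STRESS_PA:
            continue  # this sample "broke" in simulation
        if best is None or design[0] * design[1] < best[0] * best[1]:
            best = design
    return best
```

Nothing in the loop knows why a design holds. It only knows which samples survived, and more trials can only keep or improve the best survivor.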

Thought

I see an argument here. Maybe this is the essence of vibe coding (trash). We simply do not iterate fast enough and hold too dearly to getting a solution correct on the first try. Our tools are so definitive and we get invested. If you get a wrong answer, maybe you must just simulate and iterate, at increasing speeds. The tools afford rapid iteration, but the rate isn’t fast enough because a human must still evaluate and process.

Gilles Deleuze famously disparaged the abstract determinism of modern science, to which he opposed the heuristic lore of artisan “nomad sciences.”

Thought

The “tree” and “rhizome”. The tree is one part of the rhizomatic network. categorization, despite being insufficient.

Are there any felt differences with a design that is completely generative? If not, what is the point?

The artisan with an innate (through time and trial) understanding of his pot, versus an iteratively generated pot. Is there a difference in understanding of their creation? Does the artisan understand his pot any deeper?

Spline Making

All tools modify the gestures of their users, and in the design professions this feedback often leaves a visible trace: when these traces become consistent and pervasive across objects, technologies, cultures, people, and places, they coalesce into the style of an age and express the spirit of a time.

Thought

rel:Game Sound by Karen Collins where sound tech was difficult to distinguish as an aesthetic or limitation. When the style of a Nintendo sound was transplanted onto other hardware, the boundary became unclear.

Nothing shows the small data logic of the first digital age better than the spline-based curve.

Continuous curves were one of the novelties of early CAD/CAM, computer graphics, and animation software.

Digital promised variation within mass production (which was previously uniform)

Starting from the early 1990s, the pioneers of digitally intelligent architecture argued that variability is the main distinctive feature of all things digital: within given technical limits, digitally mass-customized objects, all individually different, should cost no more than standardized and mass-produced ones, all identical.

Spline modelers can script free-form curves and clusters of points into perfectly smooth and curving lines.

1960s: two French scientists working at French car companies, Pierre Bézier and Paul de Casteljau, found two different ways to write down the parametric notation of a general, free-form continuous curve. De Casteljau discovered it first, but Bézier popularized it, and thus we know them as Bézier curves.
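For reference, de Casteljau's construction is short enough to write out. A minimal sketch in 2D, assuming control points as `(x, y)` tuples; the function and type names are mine.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def de_casteljau(control: List[Point], t: float) -> Point:
    """Evaluate a Bézier curve at t in [0, 1] by repeated linear interpolation."""
    points = list(control)
    while len(points) > 1:
        # Replace each neighbouring pair of points with the point a fraction t between them.
        points = [
            ((1 - t) * x0 + t * x1, (1 - t) * y0 + t * y1)
            for (x0, y0), (x1, y1) in zip(points, points[1:])
        ]
    return points[0]
```

With control points `[(0, 0), (1, 2), (2, 0)]` the curve starts at the first point, ends at the last, and passes through `(1.0, 1.0)` at `t = 0.5`. A handful of control points stands in for the whole curve.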

On spline:

The term “spline” derives from the technical lexicon of shipbuilding, where it described slats of wood that were bent and nailed to the timber frame of the hull.

A spline is thus the smoothest line joining a number of fixed points.

As Bézier and de Casteljau both recount in their memoirs, the mathematical notation of curves and surfaces was meant to increase precision, reduce tolerances in fabrication, and save the time and cost of making and breaking physical models and mock-ups.

Few of today’s designers would use splines if they had to make each spline by hand, with math and paper, pencils and slide rule. This is one reason why free-form curves were seldom built in the past, except when absolutely indispensable, as in boats or planes, or in times of great curvilinear exuberance, such as the baroque, or the Space Age in the 1950s and 1960s.

Gehry’s Guggenheim Bilbao

Today this style is often called “parametricism.” Parametricism relies on programs, algorithms, and computers to manipulate equations for design purposes. Parametricism rejects both homogenization (serial repetition) and pure difference (agglomeration of unrelated elements) in favor of differentiation and correlation as key compositional values. The aim is to build up more spatial complexity while maintaining legibility, i.e. to intensify relations between spaces (or elements of a composition) and to adapt to contexts in ways that establish legible connections.

The Rise and Fall of the Curve

A continuous line is described by the equation y=f(x) and includes an infinite number of points. Thus an equation compresses, almost miraculously, an infinite number of points into a short and serviceable alphanumerical script.

The claim is that computers, fed a raw, unstructured list of points, can search and retrieve without disorder, because unlike humans they can search without any prior sorting.

Thought

Something about this claim (compression) is disagreeable, but I can’t say why. Sorting on differently ordered data does have consequences.

But I think the point might be that given equal footing of a human and a computer, the computer will be less taxed by irregular data.
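A small sketch to test my unease. It compares a plain scan over the raw list with a binary search that assumes sorted input; the point list and function names are made up for illustration.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple

Point = Tuple[int, int]


def linear_search(points: List[Point], target: Point) -> Optional[int]:
    """Scan every point in whatever order it arrived; no sorting needed."""
    for i, p in enumerate(points):
        if p == target:
            return i
    return None


def binary_search(sorted_points: List[Point], target: Point) -> Optional[int]:
    """Faster lookup, but only correct if the list was sorted beforehand."""
    i = bisect_left(sorted_points, target)
    if i < len(sorted_points) and sorted_points[i] == target:
        return i
    return None


raw = [(5, 1), (2, 9), (7, 3), (2, 4)]  # hypothetical unsorted point list
```

`linear_search(raw, (2, 4))` finds the point, while `binary_search(raw, (2, 4))` misses it because `raw` is unsorted. So order still matters to the faster method. The machine is simply cheap enough at scanning that it can afford to skip the sort.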

PostScript allowed (through Bézier curves) regular scaling of characters, whereas in the past each glyph was a rasterized map of pixels (or, with mechanical printers, required replacement of mechanical parts).

Michael Hansmeyer and Benjamin Dillenburger’s digital grotto. In spite of and against all appearances, the grotto was not carved from block (subtractive), but printed from dust (almost nothing) in an additive way.

This is a rather counterintuitive result, as we tend to think that decoration, or ornament, is expensive, and the more decoration we want, the more we have to pay for it. But in the case of Hansmeyer’s grotto, the details and ornament we see inside the grotto, oddly, made the grotto cheaper. (if we ignore the cost of designing the interior).

New Frontier of Alienation, and Beyond

The opulent detailing requires an inhuman or post-human level of information management: no one can notate 30 billion voxels without a machinic or automatic tool of control.

Incidental Space - Neither designed nor scripted, Kerez’s walk-in grotto is a 42-times enlargement of a cavity originally produced by a random accident inside a container the size of a shoebox. Any accident can be replicated at scale.

Incidental Space, Kerez

On architectural design: every construction is the outcome of a series of traceable decisions. But for many buildings, these decisions all just accumulate without any relation to each other. The finished building, to a certain degree, represents a catalogue of the measures that were taken. But a holistic spatial experience or a cogent architectural statement can only come about when all the decisions in the design process are reciprocally determined by one another. In that way, they take on their own imperative. In other words, a decision no longer becomes a question of personal taste, but one of architectural consistency. It is no longer a question of personal authority; the decision takes on a generally valid character, comprehensible to anyone. In this way, the search for criteria becomes the actual work of design; decisions result from this. These criteria of judgment can in turn only be derived from an overall architectural problem, an idea, which must be further reconsidered with every successive decision. Every new architectural problem demands its own specific means of investigation and specific means of reflection for valid criteria to be derived from it. And if, for us, the architectural problem includes the search for alterity, or for the enigmatic, that changes nothing in this definition of architectural design. On the contrary, it confirms this definition by different means.

Many buildings, particularly in contemporary architecture, achieve a holistic character via a shortcut in the design process: they borrow from architecture that has already been built, from something that has already been holistically worked out. This was precisely the shortcut that was precluded for us in our contribution to the Biennale in the Swiss Pavilion, since we didn’t want the built space to refer to some other space. We didn’t want it to atrophy into mere illustration. Instead, the space was meant to assert itself as an event at a particular location, for a particular time. For this reason, there was no option to depend on any existing work of architecture to attain some measure of certainty and efficiency in the design process. Instead, with our goal of generating new experiences, we were forced to understand architectural design as an intellectual adventure, full of risk. Nonetheless, Incidental Space is emphatically not a space that has been created at random, or worse, a space that has generated itself.

The End of the Projected Image

Futurism, cubism, expressionism, and architectural deconstructivism all pointed towards the death of perspectival images; that hostility is one of the things that united the avant-gardes.

Today technologies exist that can almost instantly record the spatial configurations of three-dimensional objects in space by notating as many points as needed in precise (x,y,z) coordinates.

The last encyclopedist of classical culture, Isidore of Seville stated famously on images, that images are always deceitful, never reliable, and never true to reality.

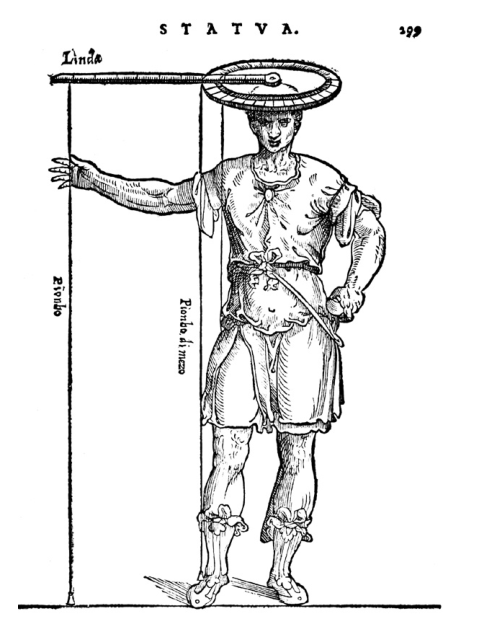

Around 1492, Leonardo had another, stronger argument to advocate the primacy of projected images over three-dimensional copies. Full-round sculptures are seen in perspective by whoever beholds them, he claimed, without any merit of their sculptors; but paintings are put into perspective by their painters (actually, into two perspectives, Leonardo famously claimed— one made with lines, the other with colors).

Alberti devised a measuring device for statuary replication, attempting to take down the spatial coordinates of as many points on a model as needed, then use this log alone to make copies. Different workshops could be tasked with reproducing different parts of the statue.

Now we have 3D printing, LIDAR, and tools like 123D Catch, which generates point clouds from stationary objects.

Three-dimensional models have replaced text and images as our tools of choice for the notation and replication, representation and quantification of the physical world around us: born verbal, then gone visual, knowledge can now be recorded and transmitted in a new spatial format.

The Participatory Turn That Never Was

Francis Galton discovered a statistical quirk in one of his last writings. In some cases, it seems possible to infer an unknown but verifiable quantity simply by asking the largest possible number of random observers to guess it, and then calculating the average of the answers. Random guesses at the weight of an ox were averaged, and the average came closer to the actual weight than the individual guesses did. The accuracy of the average increases in proportion to the number of opinions expressed, regardless of the expertise or specific information available to all observers.
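A quick simulation of the ox-guessing effect, as a sketch only. The true weight, the spread of guesses, and the assumption that guesses are independent and unbiased (Gaussian noise around the truth) are all mine. Under those assumptions the error of the average shrinks roughly with the square root of the number of guesses, which is slower than "in proportion".

```python
import random
import statistics
from typing import List

TRUE_WEIGHT = 1200.0  # hypothetical weight of the ox, in pounds


def simulate_crowd(n: int, spread: float = 75.0, seed: int = 0) -> List[float]:
    """Draw n independent, unbiased guesses scattered around the true weight."""
    rng = random.Random(seed)
    return [rng.gauss(TRUE_WEIGHT, spread) for _ in range(n)]


def crowd_error(guesses: List[float]) -> float:
    """How far the average of all guesses lands from the truth."""
    return abs(statistics.mean(guesses) - TRUE_WEIGHT)


def typical_individual_error(guesses: List[float]) -> float:
    """Median distance of a single guess from the truth."""
    return statistics.median(abs(g - TRUE_WEIGHT) for g in guesses)
```

For a crowd of a few hundred simulated guessers, `crowd_error` comes out far smaller than `typical_individual_error`. If the guesses shared a common bias, averaging would not remove it.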

The rules of grammar and syntax are themselves born out of the authority of precedent: for the most part they formalize and generalize the regularities embedded in the collective or literary use of a language, a process that unfolds over time and continues forever.

Digital objects appear to be in a state of permanent drift. A digital object is never finished or stable, and it only ever functions in part; hence it is destined, in some unpredictable way, always to be partly nonfunctioning.

Thought

This is true, even for something we think of as fixed, like a computer program. Bugs in the code exist. On large time scales, unpredictable input will always show up (even if you have some spoken rule or established business practice, if it isn’t directly enforced it will drift over time). I feel this uneasiness with a large amount of code I am unfamiliar with. On the surface it may seem complete, but what if deep within there’s some critical flaw that will only be unearthed much later, after layers and layers of work are done? This has happened, even at the hardware level.

Modern digital software building is “aggregatory” or patched together by pieces/fragments and components.

This new, “aggregatory” way of digital making may equally affect buildings, insofar as digital technologies are implied in their design, realization, or both.

Economies Without Scale: Towards a Nonstandard Society

Fragmentation of the Master Narrative.

Computer-based design and fabrication eliminate the need to standardize and mass-produce physical objects, and in many cases this has already created a new culture of distributed making that some call digital craftsmanship.

Economies of Scale - In simple terms, economies of scale means that as a company produces more goods or services, the cost per unit decreases, leading to increased efficiency and profitability.
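The definition above reduces to one line of arithmetic. A minimal sketch, assuming a simple cost model of a one-off fixed cost (tooling, molds) plus a constant cost per unit; the model, the numbers in the note below, and the function name are my own simplification.

```python
def unit_cost(fixed_cost: float, variable_cost: float, units: int) -> float:
    """Cost per unit when a one-off fixed cost is spread over the whole run."""
    if units <= 0:
        raise ValueError("units must be positive")
    return fixed_cost / units + variable_cost
```

With a fixed cost of 10000 and a variable cost of 5, `unit_cost(10000, 5, 100)` is 105.0 and `unit_cost(10000, 5, 10000)` is 6.0: the classic scale curve. If digital fabrication is assumed to push the fixed cost to zero, `unit_cost(0, 8, 1)` and `unit_cost(0, 8, 10000)` are both 8.0, so the curve is flat and a one-off costs the same per unit as a long run.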