created 2025-04-25

It is estimated that data centers today consume about 200 terawatt-hours (TWh) of energy per year, an amount expected to grow by an order of magnitude by 2030 if nothing is done to lower the energy needs of these systems.

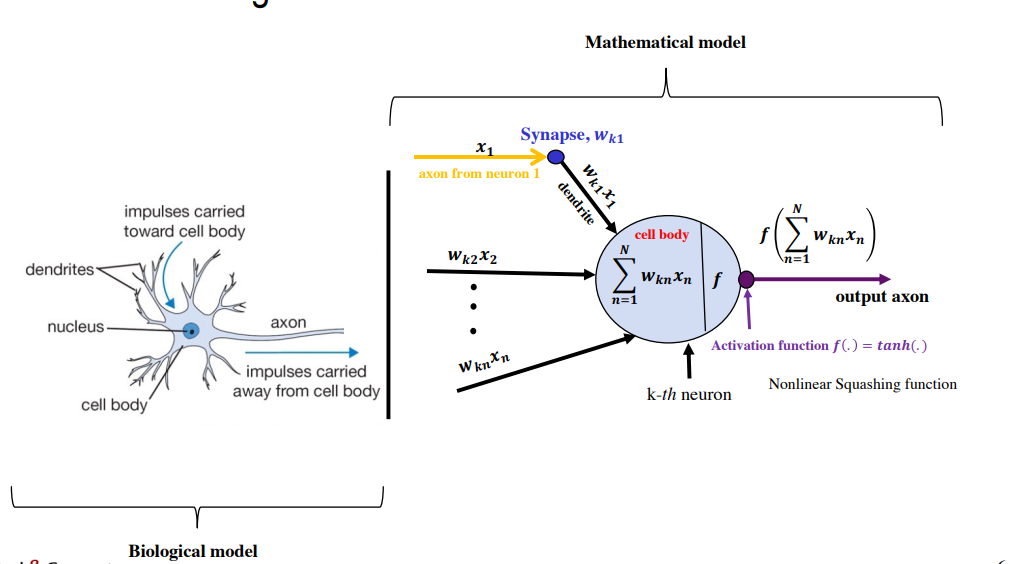

One proposed remedy is computing systems “inspired by” the human brain.

The human brain performs feats of computation that no data center can match, while consuming energy at a rate of only about 20 W.
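
For a rough sense of scale, the annual figure can be converted into an average power draw and set against the brain's 20 W. This is a back-of-the-envelope sketch that assumes the 200 TWh is consumed evenly over an 8760-hour year; it compares total data-center power with a single brain, not performance on a like-for-like workload.

```python
HOURS_PER_YEAR = 365 * 24          # 8760 h, ignoring leap years

datacenter_twh_per_year = 200      # estimate quoted above
brain_power_w = 20                 # approximate power draw of one human brain

# Convert TWh/year into an average continuous power in watts (1 TWh = 1e12 Wh).
datacenter_avg_power_w = datacenter_twh_per_year * 1e12 / HOURS_PER_YEAR

# How many brains' worth of power that continuous draw represents.
brain_equivalents = datacenter_avg_power_w / brain_power_w

print(f"Average data-center power: {datacenter_avg_power_w / 1e9:.1f} GW")
print(f"Equivalent to about {brain_equivalents:.2e} brains at {brain_power_w} W")
```

With these inputs the average draw comes out at roughly 22.8 GW, on the order of a billion brains' worth of power.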

This brain-inspired computing paradigm, called neuromorphic computing, borrows the idea that information processing and storage in the brain appear to be co-located. This contrasts with the von Neumann architecture, in which the processing unit is physically separate from the memory where data are stored. That separation is a major cause of the high energy consumption of most computing systems today: time and energy must be spent shuttling data back and forth between processor and memory.

The von Neumann architecture requires instructions (programs) and data to be shuttled back and forth between memory and processor.

von Neumann bottleneck

> Surely there must be a less primitive way of making big changes in the store than by pushing vast numbers of words back and forth through the von Neumann bottleneck. Not only is this tube a literal bottleneck for the data traffic of a problem, but, more importantly, it is an intellectual bottleneck that has kept us tied to word-at-a-time thinking instead of encouraging us to think in terms of the larger conceptual units of the task at hand. Thus programming is basically planning and detailing the enormous traffic of words through the von Neumann bottleneck, and much of that traffic concerns not significant data itself, but where to find it.
>
> (John Backus, 1977 ACM Turing Award lecture)

The use of the same bus to fetch instructions and data leads to limited throughput (data transfer rate) between the CPU and memory compared to the amount of memory. The CPU is continually forced to wait for the needed data to move to and from memory.
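
One standard way to quantify when memory traffic, rather than the processor, limits throughput is the roofline model. It is not mentioned in the original note and is used here only as an illustration; the peak compute, bandwidth, and matrix-multiply intensity below are hypothetical values.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gb_s: float,
                      flops_per_byte: float) -> float:
    """Roofline model: performance is capped by compute or by memory traffic."""
    return min(peak_gflops, bandwidth_gb_s * flops_per_byte)


# Hypothetical machine: 1000 GFLOP/s of compute, 100 GB/s of memory bandwidth.
PEAK = 1000.0
BANDWIDTH = 100.0

# Dot product of float64 vectors: each multiply-add (2 FLOPs) needs two 8-byte
# loads, so arithmetic intensity is 2 / 16 = 0.125 FLOP/byte.
dot_intensity = 2 / 16

# A large dense matrix multiply reuses each loaded value many times.
# 50 FLOP/byte is an assumed illustrative value, not a measurement.
matmul_intensity = 50.0

for name, intensity in [("dot product", dot_intensity), ("matmul", matmul_intensity)]:
    perf = attainable_gflops(PEAK, BANDWIDTH, intensity)
    bound = "memory-bound" if perf < PEAK else "compute-bound"
    print(f"{name}: {perf:.1f} GFLOP/s ({bound})")
```

Here the dot product is held to 12.5 GFLOP/s by the 100 GB/s bus even though the hypothetical processor could do 1000 GFLOP/s: the CPU spends most of its time waiting for data, which is the bottleneck described above.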

The problem can be sidestepped somewhat with parallel computing, but it is less clear whether the intellectual bottleneck has changed much.

Neuromorphic Computing

Takes inspiration from the brain.

- About 10^11 neurons (roughly 86 billion)

- Each neuron has ~10^4 synaptic connections

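Because the synapses both store information (as connection strengths) and take part in processing the signals that pass through them, computing in the brain is performed within the memory itself, unlike in classical computing.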

The cell membrane is not a perfect insulator; it leaks some ion currents (Na+, K+, Cl-).
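
A leaky membrane is commonly modelled with the leaky integrate-and-fire (LIF) neuron, a standard simplified model that the note does not name. The sketch below assumes illustrative parameter values (10 ms time constant, 10 MΩ membrane resistance, thresholds in mV) and integrates the model with forward Euler; the leak term pulls the membrane potential back toward rest, so a weak input never produces a spike.

```python
def simulate_lif(input_current_na, dt_ms=0.1, tau_ms=10.0, r_mohm=10.0,
                 v_rest_mv=-65.0, v_thresh_mv=-50.0, v_reset_mv=-65.0):
    """Leaky integrate-and-fire neuron, integrated with forward Euler.

    tau * dV/dt = -(V - V_rest) + R * I
    The -(V - V_rest) term is the leak: with no input, V decays back to rest.
    Units: mV, ms, megaohms, nanoamps (MOhm * nA = mV). Parameters are illustrative.
    """
    v = v_rest_mv
    trace, spike_times = [], []
    for step, i_na in enumerate(input_current_na):
        v += (-(v - v_rest_mv) + r_mohm * i_na) * dt_ms / tau_ms
        if v >= v_thresh_mv:          # threshold crossed: record a spike and reset
            spike_times.append(step * dt_ms)
            v = v_reset_mv
        trace.append(v)
    return trace, spike_times


# 2 nA for 100 ms: R*I = 20 mV of drive, more than the 15 mV needed to reach threshold.
trace, spikes = simulate_lif([2.0] * 1000)
print(len(spikes), "spikes with 2 nA input")

# 1 nA gives only 10 mV of drive: the leak wins and V settles below threshold.
_, no_spikes = simulate_lif([1.0] * 1000)
print(len(no_spikes), "spikes with 1 nA input")
```

Without the leak term the 1 nA input would eventually integrate up to threshold; with it, the neuron fires only when the input is strong enough to outpace the leakage.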

Differences between classical and brain computing

| Human Brain | Digital Computer |
|---|---|
| Neurons and synapses are the basic building blocks | Transistor logic blocks and memory are the basic building blocks |
| Asynchronous communication (no central clock) | Synchronous communication (single central clock, high energy cost) |
| High plasticity | Most circuits have limited reconfigurability |
| Neurons have ready access to synapses (memory) | Processor and memory are spatially separated, so much energy is spent shuttling data back and forth |
| Neurons are massively interconnected | Comparable connectivity is not feasible with current CMOS technology |
| The human neocortex is estimated to have about 1.5–3.2 × 10^10 neurons and about 1.5 × 10^14 synapses | About 1.2 × 10^12 transistors on the first-generation Cerebras Wafer-Scale Engine |
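
The asynchronous/synchronous row can be illustrated with a toy count of update operations. This is a cartoon of the idea, not a model of any particular neuromorphic chip: the clock rate, neuron count, and spike times are all hypothetical.

```python
# Toy comparison of clock-driven vs event-driven processing (all numbers hypothetical).
NUM_NEURONS = 3
SIM_TIME_US = 100_000        # 100 ms of simulated time, in microseconds
CLOCK_PERIOD_US = 100        # assumed 10 kHz global clock for the synchronous case

# Sparse activity: (time_us, neuron_id) spike events.
spike_events = [(3_000, 0), (7_500, 2), (7_500, 1), (42_000, 0)]

# Clock-driven: every neuron is updated on every tick, whether or not anything happened.
ticks = SIM_TIME_US // CLOCK_PERIOD_US
clocked_updates = ticks * NUM_NEURONS

# Event-driven: work happens only when a spike arrives, processed in time order.
event_updates = 0
for time_us, neuron_id in sorted(spike_events):
    # A real system would deliver the spike to this neuron's downstream synapses here.
    event_updates += 1

print(f"clock-driven updates: {clocked_updates}")
print(f"event-driven updates: {event_updates}")
```

With sparse activity the clocked version performs 3000 updates against 4 for the event-driven one; the gap narrows as activity becomes denser.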

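The memristor is a type of electronic synapse: a two-terminal device whose resistance depends on the history of the current that has flowed through it, so it can store a synaptic weight.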

In electronic neuromorphic computing, memristors are typically arranged in a crossbar configuration to perform vector-matrix multiplication, one of the basic operations in deep learning algorithms.

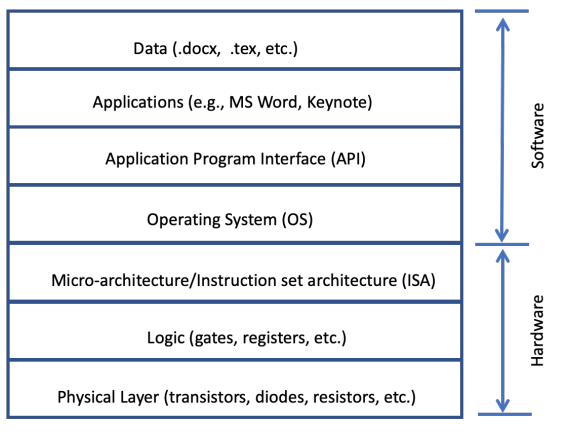
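
The crossbar works because physics does the arithmetic: inputs are applied as voltages on the row wires, each memristor's conductance stores one matrix weight, Ohm's law gives each device's current, and Kirchhoff's current law sums those currents along each column wire. The sketch below assumes ideal devices and hypothetical conductance values; real crossbars must also contend with wire resistance, device variability, and sneak-path currents, and often use pairs of devices to represent negative weights.

```python
def crossbar_vmm(voltages, conductances):
    """Ideal memristor crossbar: column current I_j = sum_i V_i * G_ij.

    voltages:     one input voltage per row wire (volts)
    conductances: G[i][j], conductance of the device joining row i to column j (siemens)
    Returns one output current per column wire (amps).
    """
    n_rows = len(voltages)
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for j in range(n_cols):
        for i in range(n_rows):
            # Ohm's law gives each device's current; Kirchhoff's current law sums them on column j.
            currents[j] += voltages[i] * conductances[i][j]
    return currents


# Hypothetical 3x2 crossbar: weights stored as conductances (tens of microsiemens).
G = [[10e-6, 20e-6],
     [30e-6, 0.0],
     [5e-6, 15e-6]]
V = [0.1, 0.2, 0.3]   # input activations encoded as read voltages

column_currents = crossbar_vmm(V, G)
print(column_currents)
```

The two column currents are the dot products of the input vector with the two columns of G, i.e. one vector-matrix multiplication carried out in a single read step, in the same place the weights are stored.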

Challenges posed by the incumbent computing framework (the drag of the incumbent stack)

- Neuromorphic computing requires its own clear, layered abstraction that explains how hardware and software should interact.