created, $=dv.current().file.ctime & modified, =this.modified

tags:artai

rel: To Imagine

NOTE

I think this is going to be about latent space and what kind of images we get out of probing these boundary territories.

I wonder, what are the most uncommon images? You can have a billboard or a commercial, and that is one type of image that people will see with their millions of Eye Contacts. But what about the image that is buried? Like the deteriorated image that is plastered in the sole of a boot that was buried in a landfill? What’s the image in a book, at the moment of nuclear detonation, in all the particles of a page annihilated and dispersed?

What is the image of the page not painted, or the page that will be painted in five years’ time, as you purchase your paints and cry your eyes out?

Wolfram: “Because in AI we finally have an accessible form of alien mind.”

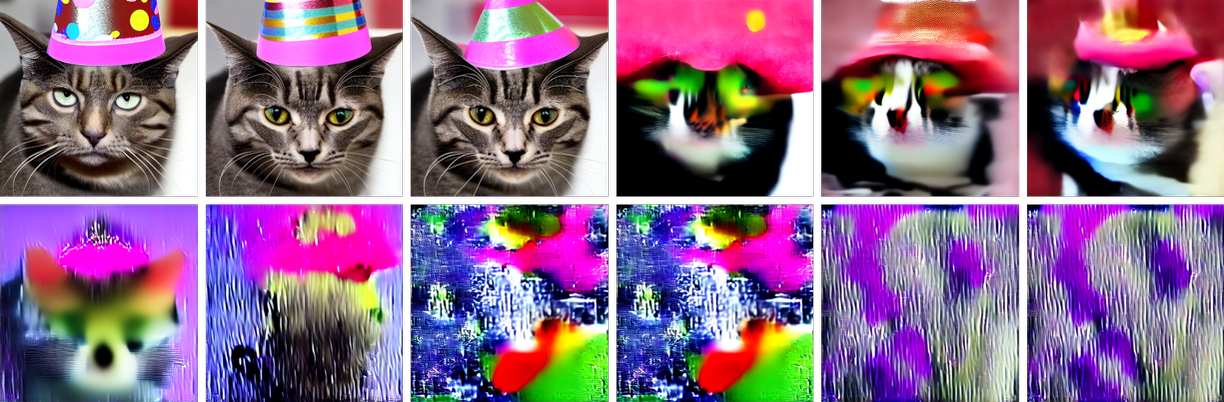

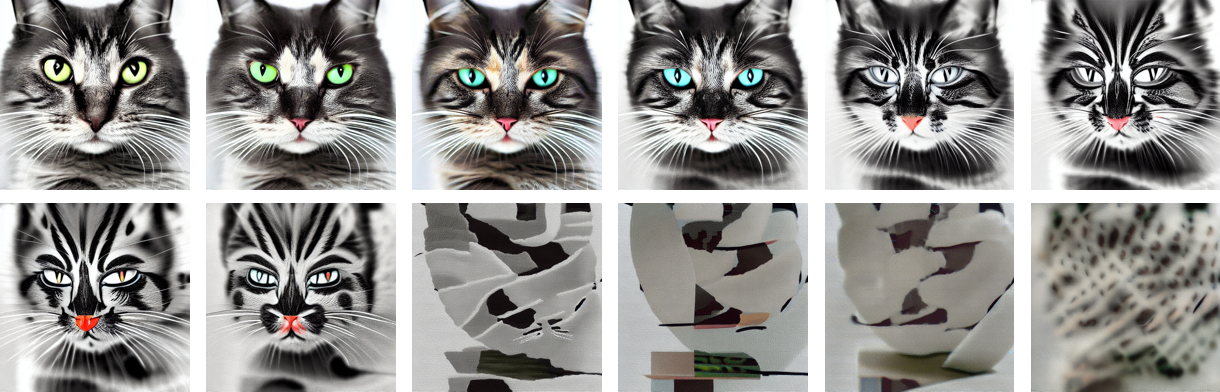

We ask a generative AI for a picture of a cat in a party hat and it produces the image we’d expect, because it was trained on images like this.

Wolfram shifts the internal weights of the net and states that this is, in effect, a view into how an alien mind would see: “artificial neuroscience”.

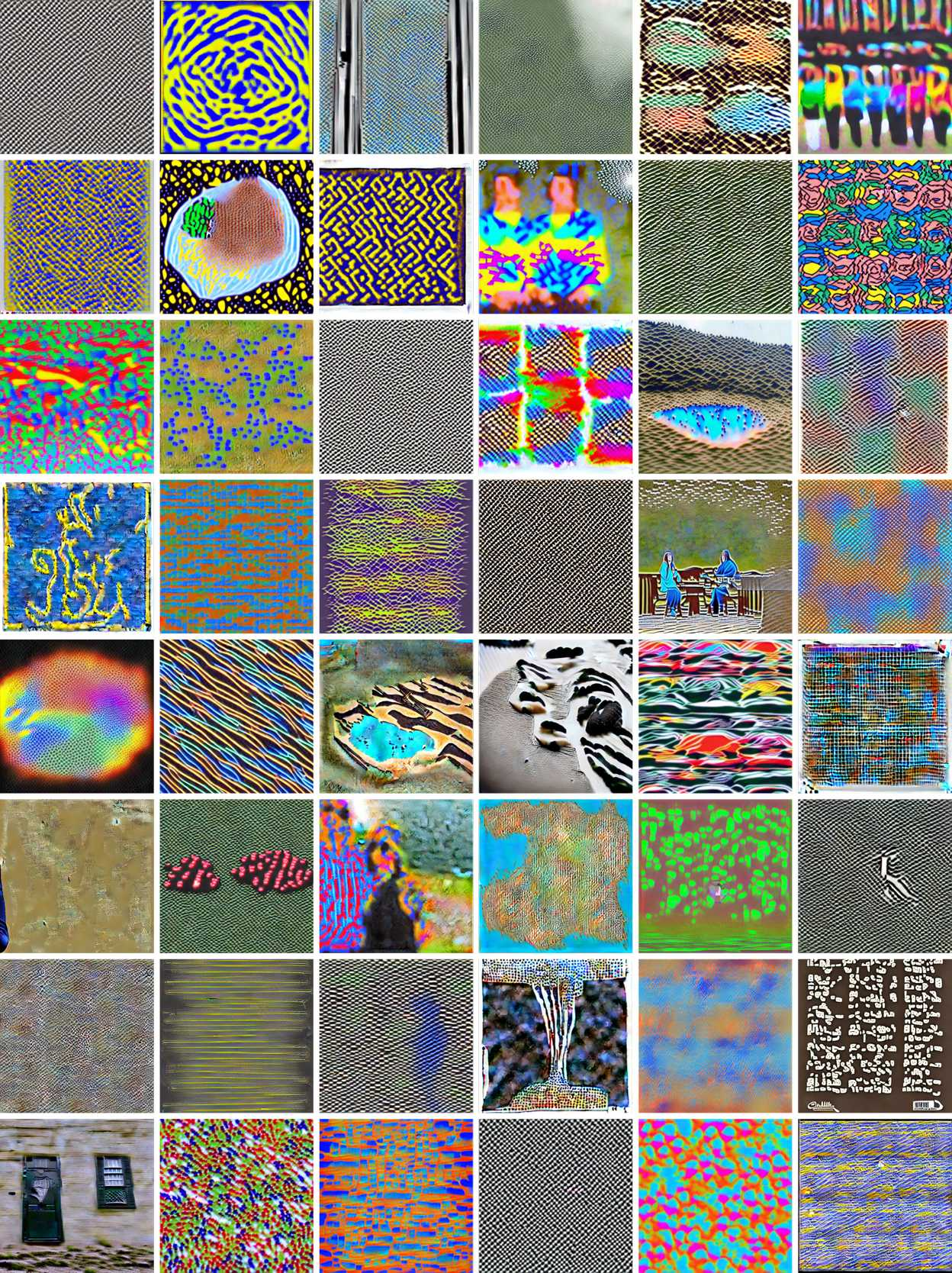

On “reasonable pictures”: images on the web aren’t random, there are regularities. Faces are mostly symmetrical. Green might be more probable at the bottom of the picture and blue at the top.
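A minimal sketch of what such a regularity could look like when measured, assuming an image is just a list of rows of `(r, g, b)` tuples. The `toy_image` below is a made-up stand-in, not data from the essay, and the function names are my own.

```python
def mean_color(rows):
    """Average (r, g, b) over every pixel in the given rows."""
    pixels = [px for row in rows for px in row]
    n = len(pixels)
    return tuple(sum(px[c] for px in pixels) / n for c in range(3))


def top_bottom_means(image):
    """Split the image into a top and bottom half and average each half."""
    half = len(image) // 2
    return mean_color(image[:half]), mean_color(image[half:])


# Hypothetical toy "landscape": two blue rows above two green rows
toy_image = [
    [(0, 0, 255), (0, 0, 255)],
    [(0, 0, 200), (0, 0, 200)],
    [(0, 200, 0), (0, 200, 0)],
    [(0, 255, 0), (0, 255, 0)],
]

top, bottom = top_bottom_means(toy_image)
print("top half mean colour:", top)
print("bottom half mean colour:", bottom)
```

Averaged over many real photos, a statistic like this is one place the "blue at the top, green at the bottom" tendency would show up, if it holds.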

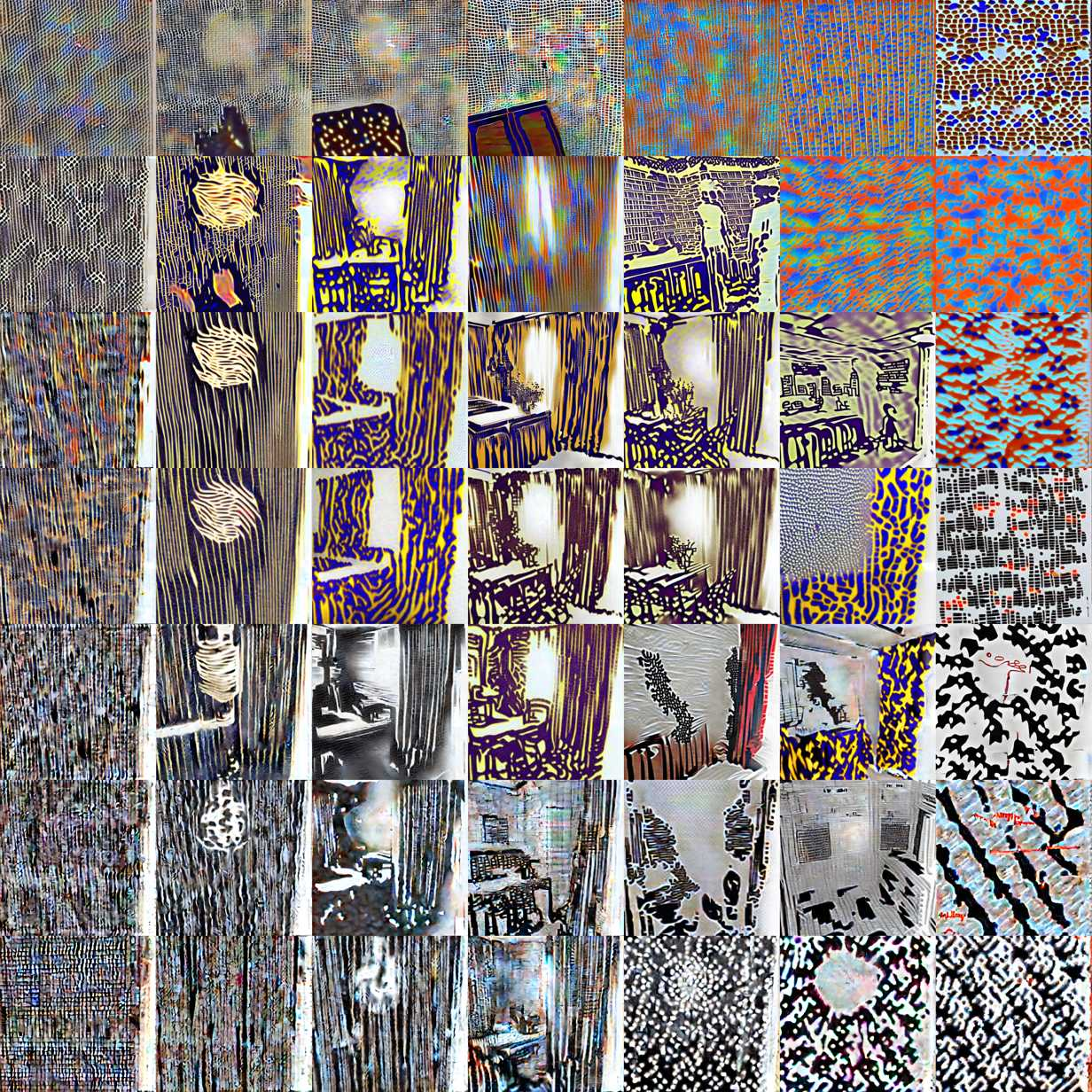

What look to us like random images at the top give way to some kind of structure, which often includes the human form.

We don’t know how mental images are formed in human brains. But it seems conceivable that the process is not too different. And that in effect as we’re trying to “conjure up a reasonable image”, we’re continually checking if it’s aligned with what we want—so that, for example, if our checking process is impaired we can end up with a different image, as in hemispatial neglect.

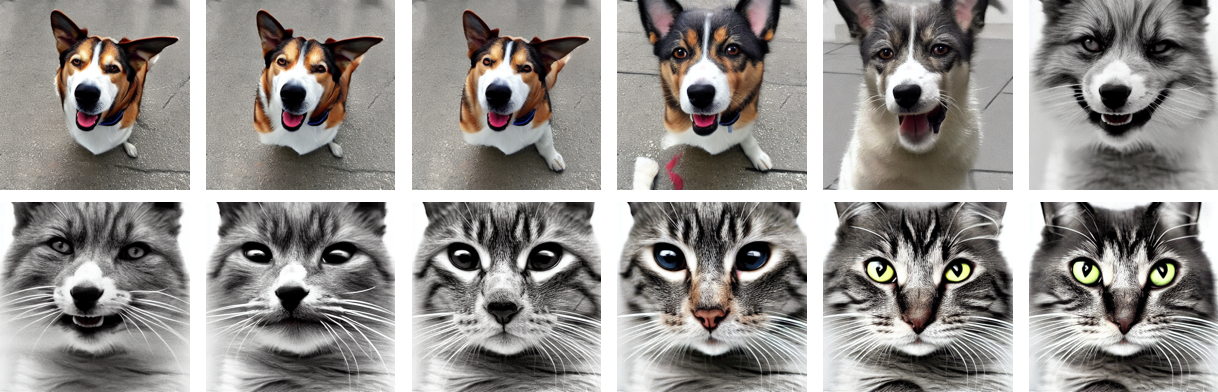

Interconcept Space

You can look at a nominally straight path through multidimensional space and see the transition from a “cat point” to a “dog point” in which the features of a cat morph into the features of a dog.
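A minimal sketch of the "straight path" idea, assuming the cat point and dog point are plain vectors and the path is ordinary linear interpolation between them. The 4-dimensional vectors are hypothetical stand-ins; a real model's space has far more dimensions, and each point would still need to be decoded into an image.

```python
def lerp_path(start, end, steps):
    """Return `steps` evenly spaced points on the straight line from start to end."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # 0.0 at the start point, 1.0 at the end point
        path.append([(1 - t) * a + t * b for a, b in zip(start, end)])
    return path


# Hypothetical stand-ins for the "cat point" and the "dog point"
cat_point = [1.0, 0.0, 2.0, -1.0]
dog_point = [0.0, 1.0, 0.0, 1.0]

path = lerp_path(cat_point, dog_point, 5)
for point in path:
    print(point)
```

Every intermediate point is a weighted blend of the two endpoints. Nothing in the arithmetic says the decoded image will be a matching blend of cat and dog features.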

Thought

It doesn’t seem to make sense to ask what percentage of an image is “cat” versus “dog”. We can say we’re fully at dog and see a dog, but at 50% traversal, what can be said of the proportion of dog to cat?

If we push “beyond” cat

But the fundamental story is always the same: there’s a kind of “cat island”, beyond which there are weird and only vaguely cat-related images—encircled by an “ocean” of what seem like purely abstract patterns with no obvious cat connection.
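One way to read pushing “beyond” cat, as a sketch only: keep moving along the same straight line after the cat point has been reached. This assumes the direction is set by a second reference point (a hypothetical dog point here), and the 2-dimensional vectors are made up for illustration.

```python
def extrapolate(origin, target, t):
    """Point on the line through origin and target.

    t = 0 gives origin, t = 1 gives target, t > 1 overshoots past target.
    """
    return [o + t * (g - o) for o, g in zip(origin, target)]


# Hypothetical 2-dimensional stand-ins
dog_point = [0.0, 1.0]
cat_point = [1.0, 0.0]

at_cat = extrapolate(dog_point, cat_point, 1.0)      # exactly the cat point
beyond_cat = extrapolate(dog_point, cat_point, 2.0)  # as far past cat as cat is from dog
print(at_cat, beyond_cat)
```

Past `t = 1` the point leaves the segment between the two concepts, which is where the weird, only vaguely cat-related images would be expected to start.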

NOTE

Something about this characterization seems wrong.

I do like the idea that I can look at some extremely erratic, entropy-riddled image of multicolored particles and actually be examining some abstruse concept that I haven’t encountered yet, which congeals into understanding and regularity with use.

Like: A cat is actually noise (if I look not as a human looks but zip around connecting the cat skin cell to the cat voice, to the inside of its cheek, to how it looked encased in magma), but understood.

Kind of like the truly orthogonal intellect of an alien encounter (Solaris) versus the rest of the ideas (humanoid grey).

But as an uninformed idiot, I feel these interconcept regions are just inchoate noise, because the concepts they were built on were more concretely classified in human-ese.

On the incomprehensible nature of the interconcept space:

It’s as if in developing our civilization—and our human language—we’ve “colonized” only certain small islands in the space of all possible concepts, leaving vast amounts of interconcept space unexplored.

rel Expression Dubstep Conveyed Conversation

Random point in concept space