created 2025-04-02, & modified, =this.modified

With a voice that doesn’t admit to not knowing

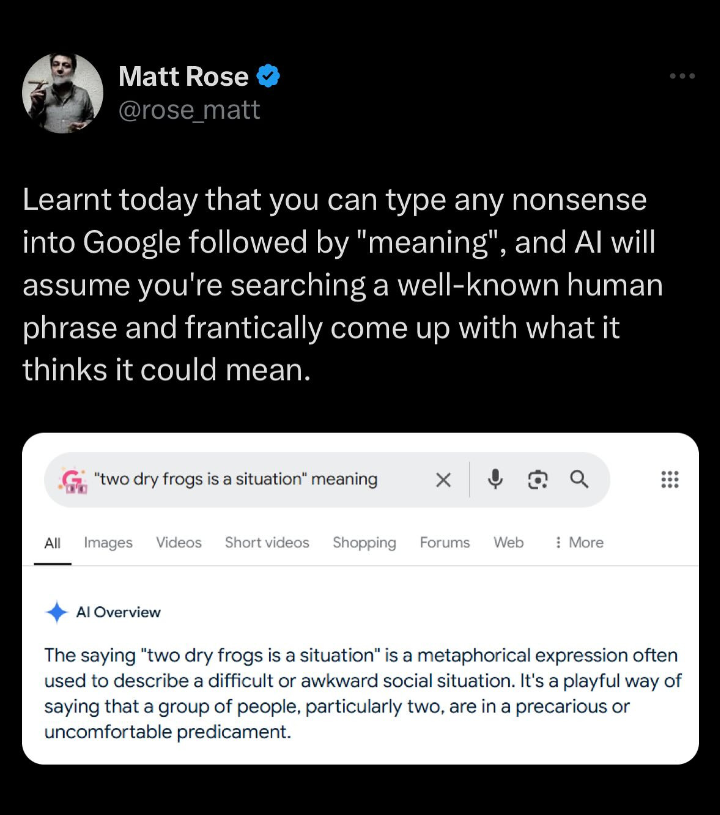

There’s a new information search loop for humans.

Previously, for most people, you searched for an answer to a question you had, or to an area of research.

If you looked and there was no concrete answer (for many people, this means just viewing the first page of search results), you presumed that nobody had disseminated the idea widely. It hadn’t been captured publicly enough to meet the threshold where you’d encounter it. [1]

You might then think to ask humans, or delve into information reservoirs not captured (or exploited) by search engines.

But with an LLM, at least at this stage of its infancy, an answer is always found, whether accurately retrieved or conjured.

Worse, this spontaneous coinage or association in a way attempts to preempt your original thought by saying “this is the answer, and I knew it before you did.”

It is a well, this associative engine that links all paths back home. The de-Googlewhack.

This is actually nefarious: etymologically, everything is going to point back to this exchange, since “two dry frogs is a situation” will likely take off. The ur-text basically becomes the nonsense chat.

Worse, imagine you’ve stumbled on your own bright idea. The response, in a way, attempts to preempt your original thought by saying “this is the answer, and I knew it before you did.”

Footnotes

1. This doesn’t mean that, in the absence of answers in the search results, you were original. It is also true that an LLM can provide you a route to information where a search engine would not.