created, $=dv.current().file.ctime & modified, =this.modified

tags: attention, consciousness

Attention and consciousness are two closely related psychological concepts that are often conflated, even among scholars. However, modern psychological and neurophysiological researchers can now independently manipulate top-down selective attention and perceptual consciousness.

- Can we become conscious of some object without attending to this object?

- Can we attend to objects that are consciously suppressed, that is, invisible?

- Can top-down selective attention and perceptual consciousness have opposing effects?

Attention Is All You Need

The 2017 paper that introduced the Transformer architecture.

Trivia: The paper’s title is a reference to “All You Need Is Love” by The Beatles.

Trivia: An early design document was called “Transformers: Iterative Self-Attention and Processing for Various Tasks” and included illustrations of Transformers characters.

attention (ML)

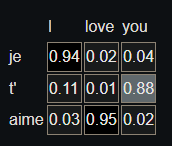

Mimics human attention by assigning different levels of importance to the components of a sequence. In NLP this usually means weighting each word in a sentence differently. Importance is determined by calculating “soft” weights for each token’s numerical representation (its embedding) within a specific section of the text called the context window. These weights can be computed for all tokens in parallel, as in transformers, or one token at a time, as in recurrent neural networks.

Photography?

It was also noticed that saccade control is modulated by cognitive processes, insofar as the eye moves preferentially towards areas of high salience. As the fovea of the eye is small, the eye cannot sharply resolve the entire visual field at once. The use of saccade control allows the eye to quickly scan important features of a scene.

NOTE

attn: We make the model construct a triple of vectors: key, query and value. The rough idea is that we have a “database” in the form of a list of key-value pairs. The decoder sends in a query and obtains a reply in the form of a weighted sum of the values, where each weight reflects how closely the query resembles the corresponding key.
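A minimal sketch of that lookup for a single query, in plain Python. The note does not say how resemblance is measured, so this assumes a scaled dot product followed by a softmax; the names `attend`, `keys`, and `values` and the toy numbers are illustrative only.

```python
import math


def softmax(scores):
    """Turn raw scores into non-negative weights that sum to 1."""
    peak = max(scores)  # shifting by the max avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Return (weights, output) for one query over a list of key-value pairs."""
    # Assumed similarity: dot product of query and key, scaled by sqrt(dimension).
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The reply is the weighted sum of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Toy "database" of three key-value pairs (made-up numbers).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

weights, output = attend([4.0, 0.0], keys, values)
print(weights)
print(output)
```

The query points along the first axis, so the first and third keys receive equal, large weights and the second key receives very little; the reply is pulled towards the values stored under the matching keys.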

Softmax function

Converts a vector of K real numbers into a probability distribution over K possible outcomes. It generalizes the logistic function to multiple dimensions and is used in multinomial logistic regression. The softmax function is often used as the last activation function of a neural network, normalizing the network’s output into a probability distribution over the predicted output classes.
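As a rough illustration, softmax can be written in a few lines of plain Python. Subtracting the maximum before exponentiating is a common numerical-stability trick rather than part of the definition, and the input scores are made up.

```python
import math


def softmax(scores):
    """Map a list of K real numbers to K probabilities that sum to 1."""
    # Subtracting the maximum leaves the result unchanged but avoids overflow in exp.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([1.0, 2.0, 3.0])
print(probs)

# With two inputs [x, 0], the first output equals the logistic function of x.
x = 1.5
two_class = softmax([x, 0.0])
logistic = 1.0 / (1.0 + math.exp(-x))
print(two_class[0], logistic)
```

Larger scores get larger probabilities, and the two-class case reduces to the logistic function, which is the sense in which softmax generalizes it.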